Notes on various LLMs and the techniques used to make them.

LLMs are better thought of as "calculators for words" - retrieval of facts is a by-product of how they are trained, but it's not their core competence at all.

Simon Willison on HN, which he later expanded on

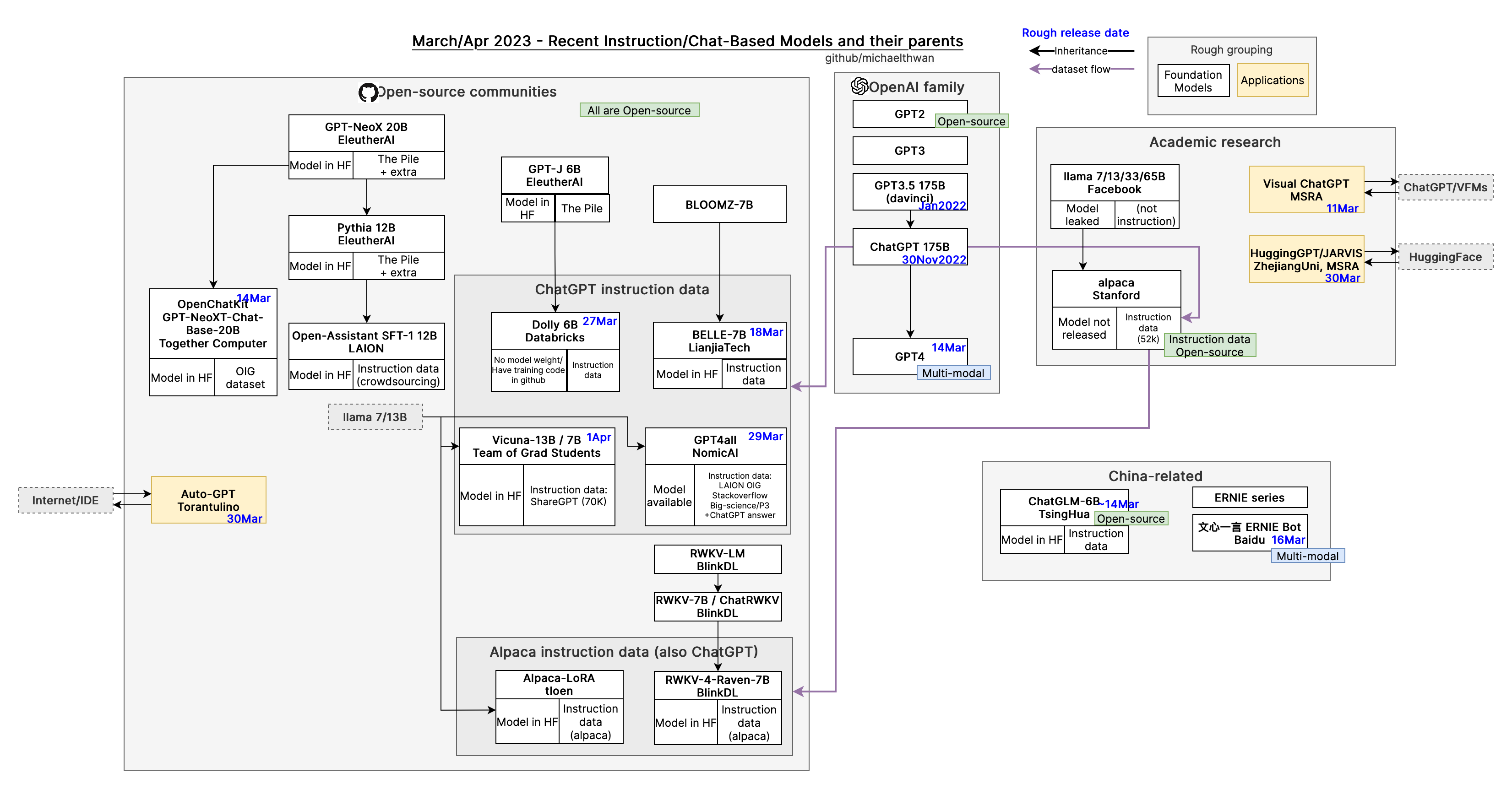

LLM Families

A more comprehensive list of models:

2023 LifeArchitect.ai data (shared)

Comparisons

hyperopt:

Does anyone know of any good test suites we can use to benchmark these local models? [...]

aiappreciator:

The simplest and quickest benchmark is to do a rap battle between GPT-4 and the local models. [...] It is instantly clear how strong the model is relative to GPT-4.

Interesting Articles

- We Have No Moat, And Neither Does OpenAI, a leaked internal Google document about the success of open-source models and how Google should change its approach against OpenAI

- Kinds of Stealing

Links

- Awesome-LLM, a curated list of papers about large language models, especially relating to ChatGPT. It also contains frameworks for LLM training, tools to deploy LLMs, courses and tutorials about LLMs, and all publicly available LLM checkpoints and APIs.

- LLM Tracker, a spreadsheet detailing the different LLMs and their properties

- Brex's Prompt Engineering Guide

- The Secret Sauce behind 100K context window in LLMs: all tricks in one place

- Signs of AI Writing